Abstract:

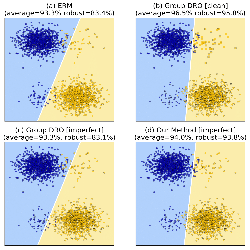

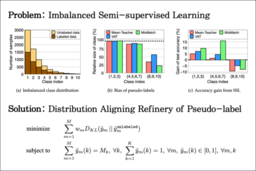

Real-world datasets are often biased with respect to key demographic factors such as race and gender. Due to the latent nature of the underlying factors, detecting and mitigating bias is especially challenging for unsupervised machine learning. We present a weakly supervised algorithm for overcoming dataset bias for deep generative models. Our approach requires access to an additional small, unlabeled but unbiased dataset as the supervision signal, thus sidestepping the need for explicit labels on the underlying bias factors. Using this supplementary dataset, we detect the bias in existing datasets via a density ratio technique and learn generative models which efficiently achieve the twin goals of: 1) data efficiency by using training examples from both biased and unbiased datasets for learning, 2) unbiased data generation at test time. Empirically, we demonstrate the efficacy of our approach which reduces bias w.r.t. latent factors by 37.2% on average over baselines for comparable image generation using generative adversarial networks.