Abstract:

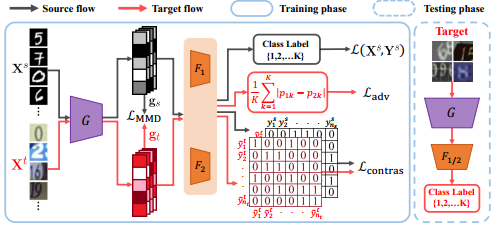

Neural image and text encoders have been proposed to align abstract image representations with symbolic text representations. Integrating global-local and local-local information between the two modalities is essential for effective alignment. In this paper, we present RELation-aware Adaptive Cross-attention (RELAX), which achieves state-of-the-art performance on cross-modal retrieval tasks through several novel improvements. First, cross-attention methods integrate global-local information by computing a weighted global feature of one modality (taken as the value) for each local feature of the other modality (taken as the query). Alignment can be made more accurate by also considering the global weights of the query modality, so we introduce an adaptive embedding that takes these weights into account. Second, to make better use of scene graphs, which capture high-level relations among local features, we introduce transformer encoders for textual scene graphs and align them with visual scene graphs. Lastly, we use the NT-XEnt loss, which takes a weighted sum of the samples based on their importance. Extensive experiments show that our approach is effective and outperforms other state-of-the-art models.
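
For readers unfamiliar with the NT-XEnt (normalized temperature-scaled cross-entropy) loss mentioned above, the following is a minimal sketch of its standard symmetric form for image-text retrieval. It is not necessarily the exact variant used in RELAX. The function names, the default temperature of 0.1, and the assumption that matched image-text pairs lie on the diagonal of the similarity matrix are illustrative choices, not details taken from the paper.

```python
import math


def log_sum_exp(values):
    """Numerically stable log(sum(exp(v) for v in values))."""
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))


def softmax_weights(scores, temperature=0.1):
    """Softmax over temperature-scaled scores.

    In the standard loss these are the relative weights the candidates
    receive, so higher-scoring (harder) negatives count more.
    """
    scaled = [s / temperature for s in scores]
    lse = log_sum_exp(scaled)
    return [math.exp(s - lse) for s in scaled]


def nt_xent(sim, temperature=0.1):
    """Symmetric NT-Xent loss over a square similarity matrix.

    sim[i][j] is the similarity between image i and text j.
    Assumption: the matched pair for index i is sim[i][i].
    """
    n = len(sim)
    total = 0.0
    for i in range(n):
        row = [sim[i][j] / temperature for j in range(n)]  # image i vs. all texts
        col = [sim[j][i] / temperature for j in range(n)]  # text i vs. all images
        pos = sim[i][i] / temperature
        # -log softmax of the positive pair, in both retrieval directions
        total += (log_sum_exp(row) - pos) + (log_sum_exp(col) - pos)
    return total / (2 * n)
```

Lowering the temperature sharpens the softmax, so a few hard negatives dominate the loss; raising it spreads the weight more evenly across all samples in the batch.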