Abstract:

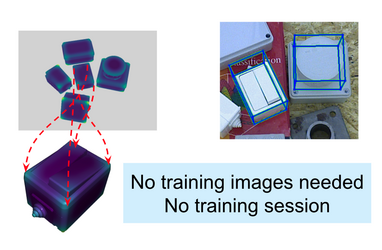

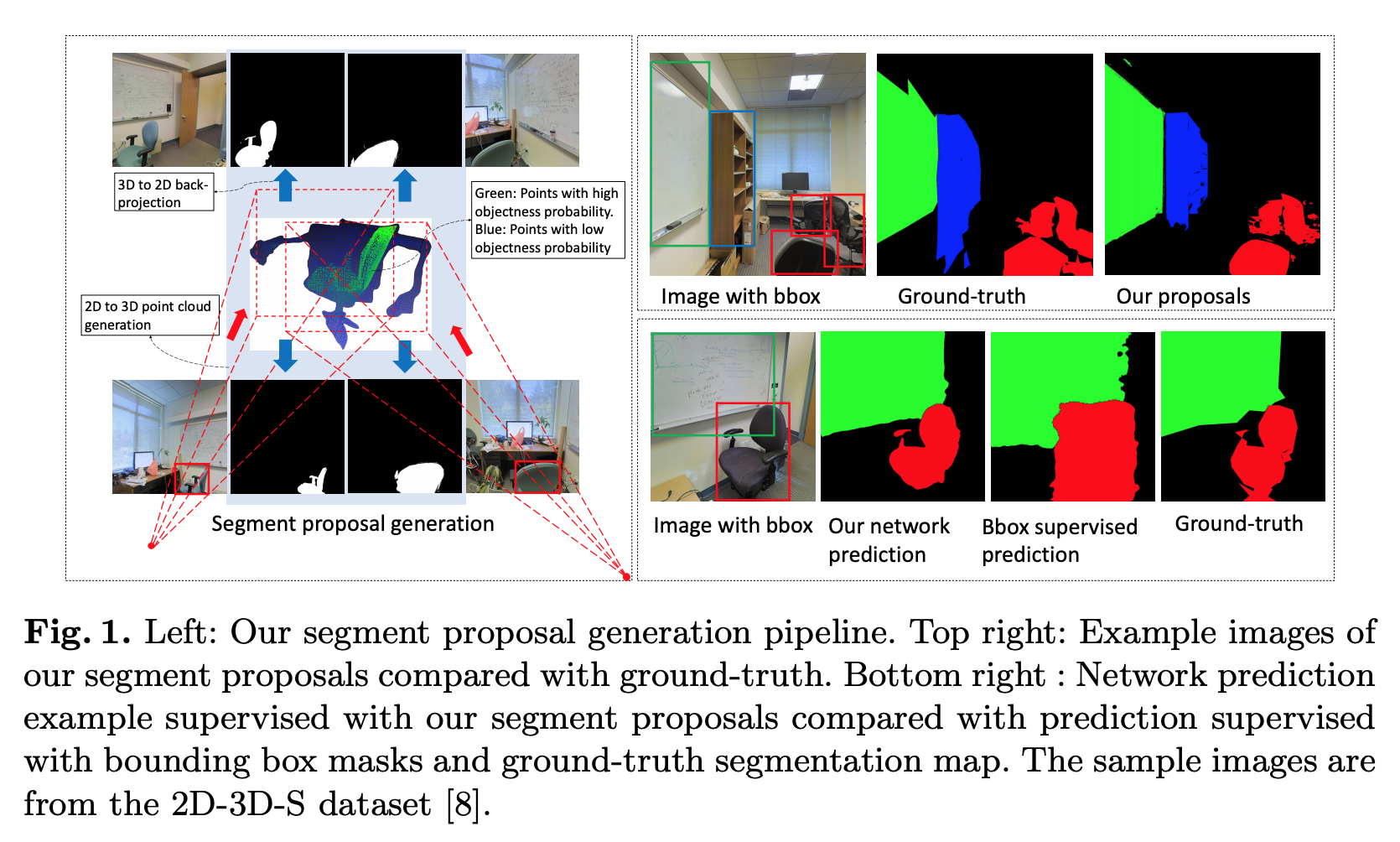

Passive methods for object detection and segmentation treat images of the same scene as individual samples and do not exploit object permanence across multiple views. Generalization to novel or difficult viewpoints thus requires additional training with many annotations. In contrast, humans often recognize objects simply by moving around to obtain more informative viewpoints. In this paper, we propose a method for improving object detection in test environments, assuming nothing but an embodied agent with a pre-trained 2D object detector. Our agent collects multi-view data, generates 2D and 3D pseudo-labels, and fine-tunes its detector in a self-supervised manner. Experiments on both indoor and outdoor datasets show that (1) our method obtains high-quality 2D and 3D pseudo-labels from multi-view RGB-D data; (2) fine-tuning with these pseudo-labels significantly improves the 2D detector in the test environment; (3) training a 3D detector with our pseudo-labels outperforms a prior self-supervised method by a large margin; and (4) given weak supervision, our method can generate better pseudo-labels for novel objects.
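
To make the multi-view pseudo-labeling idea concrete, the following is a minimal illustrative sketch and not the implementation used in this paper. It assumes a pinhole camera with known intrinsics `K`, a known camera-to-world pose per view, and a metric depth image, and it fuses detections into an axis-aligned 3D box, which is a simplification chosen for brevity. All function names (`unproject_box`, `fuse_3d_box`, `project_box`) are hypothetical. The sketch lifts the pixels inside a confident 2D detection to 3D, merges points from several views into a 3D pseudo-label, and projects that box into another view to obtain a 2D pseudo-label there; the fine-tuning step is omitted.

```python
import numpy as np

def unproject_box(depth, K, cam_to_world, box):
    """Lift pixels inside a 2D box (x0, y0, x1, y1) to world-frame 3D points.

    Assumption: every pixel in the box belongs to the object. A real system
    would more likely use a segmentation mask to exclude background pixels.
    """
    x0, y0, x1, y1 = box
    us, vs = np.meshgrid(np.arange(x0, x1), np.arange(y0, y1))
    z = depth[vs, us]
    valid = z > 0  # drop pixels with missing depth
    us, vs, z = us[valid], vs[valid], z[valid]
    # Pinhole back-projection: pixel (u, v) at depth z -> camera-frame (x, y, z).
    x = (us - K[0, 2]) * z / K[0, 0]
    y = (vs - K[1, 2]) * z / K[1, 1]
    pts_cam = np.stack([x, y, z, np.ones_like(z)], axis=0)  # shape 4 x N
    return (cam_to_world @ pts_cam)[:3].T  # shape N x 3

def fuse_3d_box(point_sets):
    """Merge per-view point sets into one axis-aligned 3D box (min, max corners)."""
    pts = np.concatenate(point_sets, axis=0)
    return pts.min(axis=0), pts.max(axis=0)

def project_box(box_min, box_max, K, cam_to_world):
    """Project the 8 corners of a 3D box into a view and return the enclosing 2D box.

    Assumption: all corners lie in front of the camera (positive depth).
    """
    corners = np.array([[x, y, z]
                        for x in (box_min[0], box_max[0])
                        for y in (box_min[1], box_max[1])
                        for z in (box_min[2], box_max[2])])
    homog = np.concatenate([corners, np.ones((8, 1))], axis=1).T  # shape 4 x 8
    cam = (np.linalg.inv(cam_to_world) @ homog)[:3]
    uv = K @ cam
    uv = uv[:2] / uv[2]
    return uv[0].min(), uv[1].min(), uv[0].max(), uv[1].max()
```

In this sketch, a detection that is confident in one view supplies a label for views where the detector is less reliable, which is one way to read the object-permanence argument above. The resulting 2D boxes could then serve as training targets for the detector.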