Abstract:

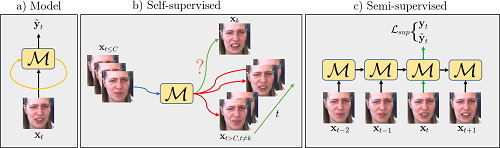

We study the problem of learning to assign a characteristic pose, i.e., scale and orientation, to an image region of interest. Despite its apparent simplicity, the problem is non-trivial: it is hard to obtain a large-scale set of image regions with explicit pose annotations from which a model can learn directly. To tackle this issue, we propose a self-supervised learning framework with a histogram alignment technique. We generate pairs of image regions by random rescaling and rotation, and then train a model to predict their scale and orientation distributions so that the relative difference between the two predictions is consistent with the rescaling and rotation that were applied. The proposed method learns non-parametric, multi-modal distributions of scale and orientation without any supervision, achieving a significant improvement over previous methods in experimental evaluation.
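To make the consistency idea concrete, the following is a minimal sketch of one possible reading of histogram alignment, restricted to orientation. It is an illustration under stated assumptions, not the paper's implementation: we assume a histogram of `num_bins` orientation bins covering 360 degrees, assume that a known rotation corresponds to a cyclic shift of that histogram, and assume a cross-entropy penalty between the shifted histogram of the original region and the predicted histogram of the rotated region. The names `rotation_to_bins` and `alignment_loss` are hypothetical, and random logits stand in for model outputs.

```python
import numpy as np


def softmax(x):
    """Turn raw scores into a probability histogram."""
    e = np.exp(x - np.max(x))
    return e / e.sum()


def rotation_to_bins(angle_deg, num_bins):
    """Convert a rotation angle into a whole number of histogram bins (assumed binning)."""
    bin_width = 360.0 / num_bins
    return int(round(angle_deg / bin_width)) % num_bins


def alignment_loss(hist_a, hist_b, shift, eps=1e-8):
    """Cross-entropy between hist_a cyclically shifted by `shift` bins and hist_b.

    hist_a: predicted orientation histogram of the original region.
    hist_b: predicted orientation histogram of the rotated region.
    The loss is smallest when hist_b matches the shifted hist_a.
    """
    aligned_a = np.roll(hist_a, shift)
    return float(-np.sum(aligned_a * np.log(hist_b + eps)))


num_bins = 36  # assumed: 10 degrees per bin
rng = np.random.default_rng(0)

# Stand-in for a model's output on the original region.
hist_a = softmax(rng.normal(size=num_bins))

# The rotated copy of the region is produced with a known 90-degree rotation.
shift = rotation_to_bins(90.0, num_bins)

# A prediction that respects the rotation versus one that ignores it.
hist_b_consistent = np.roll(hist_a, shift)
hist_b_inconsistent = hist_a.copy()

loss_consistent = alignment_loss(hist_a, hist_b_consistent, shift)
loss_inconsistent = alignment_loss(hist_a, hist_b_inconsistent, shift)
print(loss_consistent < loss_inconsistent)
```

Because the target is a full histogram and not a single angle, a penalty of this kind leaves room for multi-modal predictions. Scale is not periodic, so a scale histogram would need a non-cyclic shift; that case is omitted from this sketch.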