Abstract:

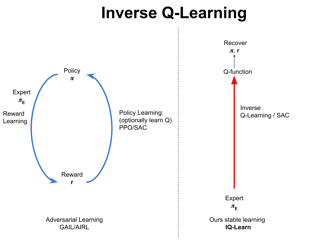

Deep reinforcement learning (DRL) algorithms have proven effective on a wide range of challenging decision-making and control tasks. However, these methods typically suffer from severe action oscillation, particularly in discrete action settings: agents select different actions in consecutive steps even though the states differ only slightly. This issue is often neglected because the quality of a policy is usually evaluated using cumulative reward alone. Action oscillation strongly degrades the user experience and can even pose serious safety hazards, especially in safety-critical real-world domains such as autonomous driving. In this paper, we introduce the Policy Inertia Controller (PIC), a generic plug-in framework for off-the-shelf DRL algorithms that enables an adaptive trade-off between optimality and smoothness in a formal way. We propose Nested Policy Iteration as a general training algorithm for PIC-augmented policies, which ensures monotonically non-decreasing updates. Further, we derive a practical DRL algorithm, namely Nested Soft Actor-Critic. Experiments on a collection of autonomous driving tasks and several Atari games suggest that our approach achieves substantially greater oscillation reduction than a range of commonly adopted baselines, with almost no performance degradation.
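
To make the notion of action oscillation concrete, the following minimal sketch counts how often a discrete action changes between consecutive steps of a trajectory. The function name `oscillation_rate` and the metric itself are illustrative assumptions, not necessarily the measure used in the paper's experiments.

```python
from typing import Sequence


def oscillation_rate(actions: Sequence[int]) -> float:
    """Fraction of consecutive step pairs in which the chosen action changes.

    Hypothetical illustrative metric: 0.0 means the action never changes,
    1.0 means it changes at every step.
    """
    if len(actions) < 2:
        return 0.0  # a single step (or none) cannot oscillate
    switches = sum(1 for prev, cur in zip(actions, actions[1:]) if prev != cur)
    return switches / (len(actions) - 1)


# Two trajectories that use the same two actions equally often
jittery = [0, 1, 0, 1, 0, 1]  # switches at every step
smooth = [0, 0, 0, 1, 1, 1]   # switches exactly once

print(oscillation_rate(jittery))
print(oscillation_rate(smooth))
```

Both trajectories could plausibly earn similar cumulative reward, yet the first switches actions five times in five transitions and the second only once, which is why a reward-only evaluation can hide the problem.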