Abstract:

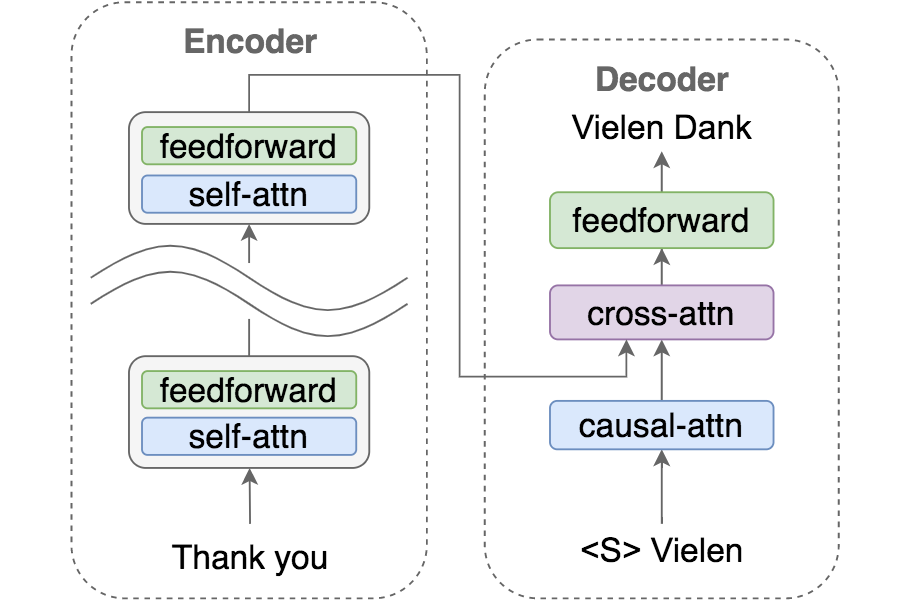

Lexically constrained neural machine translation (NMT), which leverages pre-specified translations to constrain NMT output, has practical significance in interactive translation and NMT domain adaptation. Previous work either modifies the decoding algorithm or trains the model on an augmented dataset. These methods suffer from either high computational overhead or low copying success rates. In this paper, we investigate Att-Input and Att-Output, two alignment-based constrained decoding methods. Both revise the target tokens during decoding based on word alignments derived from encoder-decoder attention weights. Our study shows that Att-Input translates better while Att-Output is more computationally efficient. Capitalizing on both strengths, we further propose EAM-Output by introducing an explicit alignment module (EAM) to a pretrained Transformer. It decodes in the same way as Att-Output, except that it uses alignments derived from the EAM. We use the word alignments induced by Att-Input as labels and train the EAM while keeping the parameters of the Transformer frozen. Experiments on WMT16 De-En and WMT16 Ro-En show the effectiveness of our approaches on constrained NMT. In particular, the proposed EAM-Output method consistently outperforms previous approaches in translation quality, with only light computational overhead over the unconstrained baseline.
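
The general idea of revising target tokens from attention-derived alignments can be illustrated with a minimal sketch. It is not the Att-Input or Att-Output procedure itself, whose details the abstract does not give. It assumes single-token constraints, a hard alignment taken as the argmax over one row of encoder-decoder attention weights, and hypothetical helper names (`align_from_attention`, `revise_token`) introduced only for illustration.

```python
from typing import Dict, Sequence


def align_from_attention(attn_row: Sequence[float]) -> int:
    """Return the source position with the highest attention weight.

    Assumption: a hard argmax alignment; real systems may combine
    heads or layers differently.
    """
    return max(range(len(attn_row)), key=lambda j: attn_row[j])


def revise_token(proposed: str,
                 attn_row: Sequence[float],
                 constraints: Dict[int, str]) -> str:
    """Replace the proposed target token with the constraint translation
    if the aligned source position carries a constraint; otherwise keep it.

    `constraints` maps a source position to its pre-specified translation
    (hypothetical format, single-token constraints only).
    """
    src_pos = align_from_attention(attn_row)
    return constraints.get(src_pos, proposed)


# Toy example: source "Das Haus ist klein", with the constraint
# that source position 1 ("Haus") must be translated as "building".
proposed_tokens = ["the", "house", "is", "small"]
attention = [
    [0.70, 0.10, 0.10, 0.10],
    [0.10, 0.80, 0.05, 0.05],
    [0.10, 0.10, 0.70, 0.10],
    [0.05, 0.05, 0.10, 0.80],
]
constraints = {1: "building"}

revised = [
    revise_token(tok, row, constraints)
    for tok, row in zip(proposed_tokens, attention)
]
print(revised)
```

In this toy setting only the token aligned to the constrained source position is overwritten. Multi-token constraints and the choice of which attention weights to read are left out of the sketch.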