Abstract:

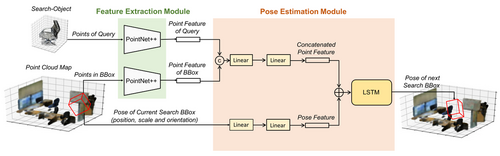

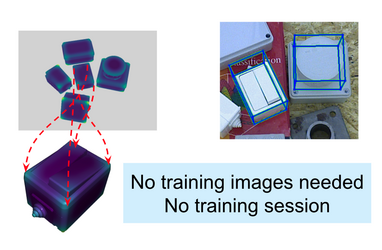

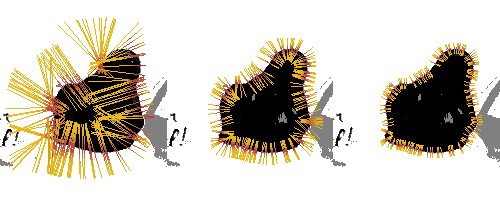

Efficient pose estimation is useful in Augmented Reality (AR) and in other computer vision applications such as autonomous navigation and robotics. On-device inference in these applications requires a pose estimation method that is both compact and accurate. Our proposed solution, 3DPoseLite, estimates the pose of generic objects using a compact node embedding representation, in contrast to computationally expensive multi-view and point-cloud representations. The neural network takes an RGB image and its corresponding graph (obtained by skeletonizing the 3D mesh) as inputs and outputs a 3D pose. Our approach uses the node2vec framework to learn low-dimensional representations for the nodes of the graph by optimizing a neighborhood-preserving objective. Relative to the state-of-the-art approach, PoseFromShape, we reduce space and time requirements by factors of 11x and 3x, respectively, on the Pascal3D benchmark dataset. We also evaluate our model on unseen data using the Pix3D dataset.
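
To make the node2vec step concrete, the sketch below generates the biased second-order random walks that node2vec uses to define node neighborhoods. It is an illustration only, not the authors' implementation: the toy `skeleton` graph, the function names `biased_walk` and `generate_walks`, and the values of the return parameter `p` and in-out parameter `q` are all assumptions, since the abstract does not specify them.

```python
import random


def biased_walk(adj, start, length, p=1.0, q=1.0, rng=None):
    """Generate one node2vec-style second-order random walk.

    adj maps each node to the set of its neighbors (undirected, unweighted).
    p controls the likelihood of returning to the previous node,
    q controls the likelihood of moving further away from it.
    """
    rng = rng or random.Random()
    walk = [start]
    while len(walk) < length:
        cur = walk[-1]
        nbrs = sorted(adj[cur])
        if not nbrs:
            break  # isolated node: the walk cannot continue
        if len(walk) == 1:
            # The first step has no previous node, so sample uniformly.
            walk.append(rng.choice(nbrs))
            continue
        prev = walk[-2]
        weights = []
        for nxt in nbrs:
            if nxt == prev:
                weights.append(1.0 / p)  # step back to the previous node
            elif nxt in adj[prev]:
                weights.append(1.0)      # stay within distance 1 of prev
            else:
                weights.append(1.0 / q)  # move outward, away from prev
        walk.append(rng.choices(nbrs, weights=weights, k=1)[0])
    return walk


def generate_walks(adj, num_walks, length, p, q, seed=0):
    """Start num_walks walks from every node, in shuffled order."""
    rng = random.Random(seed)
    walks = []
    for _ in range(num_walks):
        nodes = sorted(adj)
        rng.shuffle(nodes)
        for node in nodes:
            walks.append(biased_walk(adj, node, length, p, q, rng))
    return walks


# Hypothetical toy skeleton graph (not from the paper): a chain with one branch.
skeleton = {0: {1}, 1: {0, 2, 3}, 2: {1}, 3: {1, 4}, 4: {3}}

# Assumed hyperparameters, chosen only for illustration.
walks = generate_walks(skeleton, num_walks=2, length=5, p=1.0, q=0.5, seed=0)
```

In node2vec, walks like these are then treated as sentences and passed to a skip-gram model, which yields one low-dimensional vector per node. That training step is omitted here.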