Abstract:

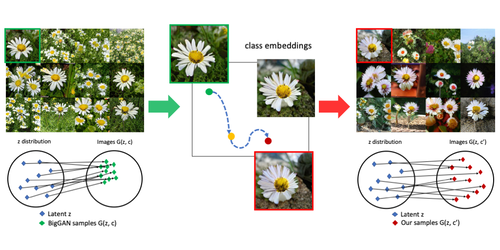

As one of the largest B2C e-commerce platforms in China, JD.com also powers a leading advertising system, serving millions of advertisers with fingertip connection to hundreds of millions of customers. In our system, as well as most e-commerce scenarios, ads are displayed with images. This makes visual-aware Click Through Rate (CTR) prediction of crucial importance to both business effectiveness and user experience. Existing algorithms usually extract visual features using off-the-shelf Convolutional Neural Networks (CNNs) and late fuse the visual and non-visual features for the finally predicted CTR. Despite being extensively studied, this field still face two key challenges. First, although encouraging progress has been made in offline studies, applying CNNs in real systems remains non-trivial, due to the strict requirements for efficient end-to-end training and low-latency online serving. Second, the off-the-shelf CNNs and late fusion architectures are suboptimal. Specifically, off-the-shelf CNNs were designed for classification thus never take categories as input features. While in e-commerce, categories are precisely labeled and contain abundant visual priors that will help the visual modeling. Unaware of the ad category, these CNNs may extract some unnecessary category-unrelated features, wasting CNN’s limited expression ability. To overcome the two challenges, we propose Category-specific CNN (CSCNN) specially for CTR prediction. CSCNN early incorporates the category knowledge with a light-weighted attention-module on each convolutional layer. This enables CSCNN to extract expressive category-specific visual patterns that benefit the CTR prediction. Offline experiments on benchmark and a 10 billion scale real production dataset from JD, together with an Online A/B test show that CSCNN outperforms all compared state-of-the-art algorithms. We also build a highly efficient infrastructure to accomplish end-to-end training with CNN on the 10 billion scale real production dataset within 24 hours, and meet the low latency requirements of online system (20ms on CPU). CSCNN is now deployed in the search advertising system of JD, serving the main traffic of hundreds of millions of active users.