Abstract:

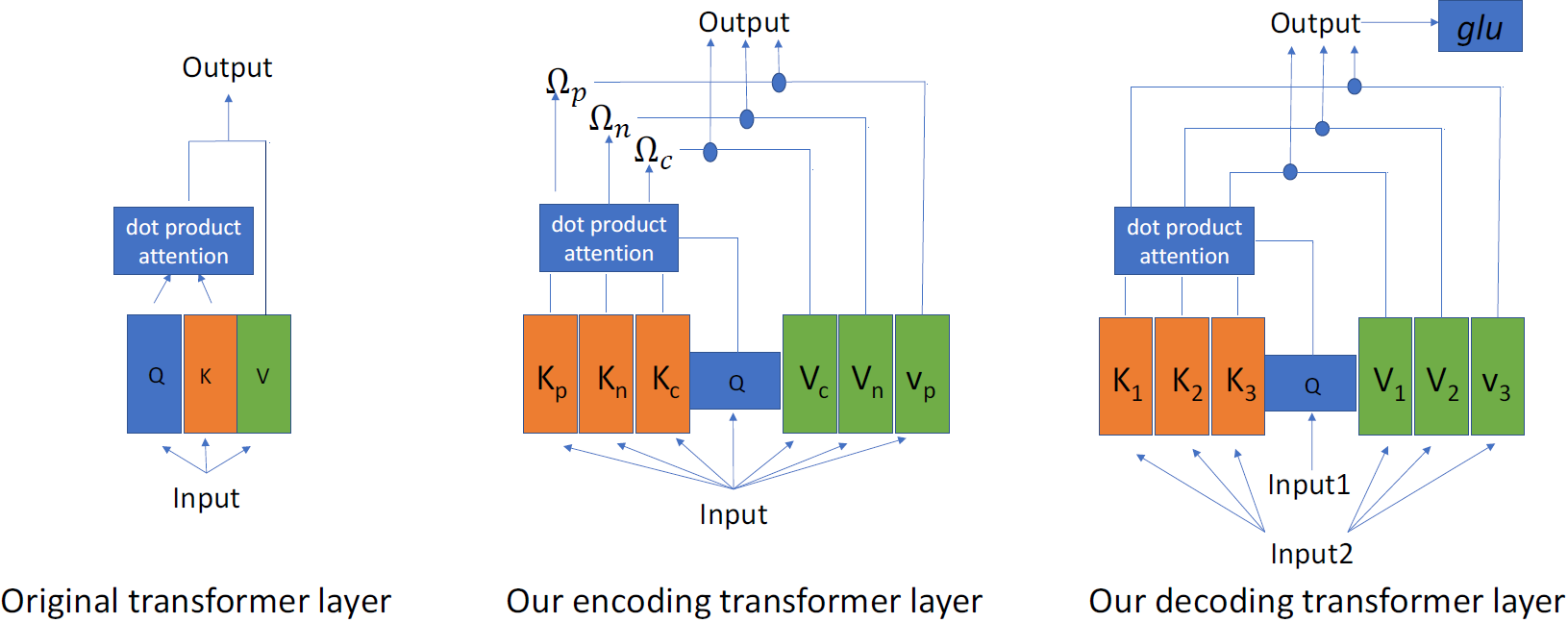

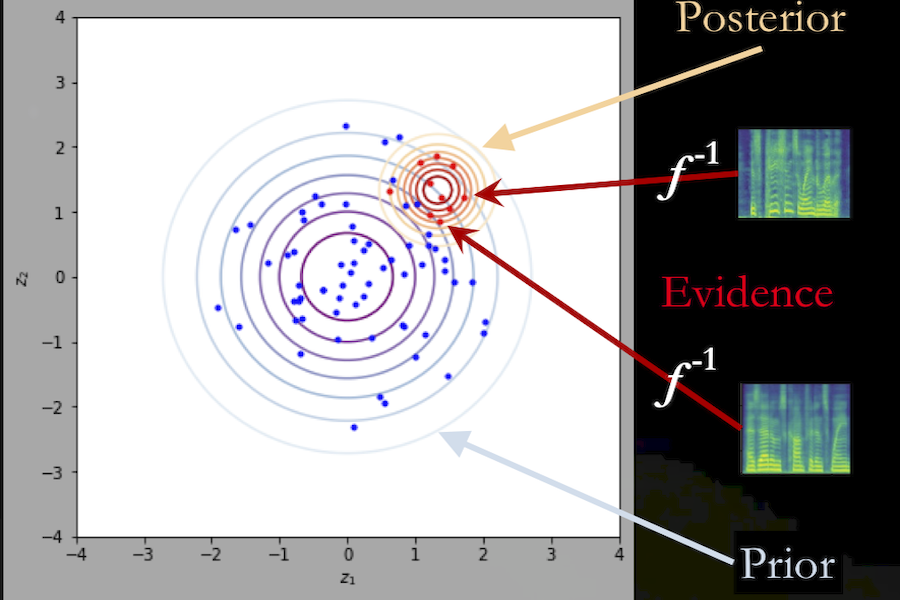

Automated audio captioning is the task of generating a textual description of an audio clip. This paper proposes an automated audio captioning system that combines pre-trained CNN layers with a Transformer-based sequence-to-sequence architecture. The pre-trained CNN layers are taken from a CNN-based neural network for acoustic event tagging, which makes the resulting latent representation more effective for generating captions. A Transformer decoder is used in the sequence-to-sequence architecture; it was chosen after comparing its performance with that of the more classical LSTM layers. With data augmentation and label smoothing applied, the proposed system achieves a SPIDEr score of 0.227 on DCASE 2020 Challenge Task 6.
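
To make the label smoothing mentioned above concrete, the following is a minimal sketch of a label-smoothed cross-entropy for a single decoded token. It is an illustration under stated assumptions and not the implementation used in this work: the smoothing factor `epsilon=0.1`, the uniform redistribution of probability mass over the vocabulary, and the function names are all hypothetical choices that the abstract does not specify.

```python
import math


def smooth_labels(target_index, vocab_size, epsilon=0.1):
    """Build a smoothed target distribution over the vocabulary.

    Assumption: epsilon is spread uniformly over all vocab_size classes,
    and the remaining 1 - epsilon is added to the true class.
    """
    dist = [epsilon / vocab_size] * vocab_size
    dist[target_index] += 1.0 - epsilon
    return dist


def log_softmax(logits):
    """Numerically stable log-softmax over a list of logits."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_z for x in logits]


def label_smoothed_cross_entropy(logits, target_index, epsilon=0.1):
    """Cross-entropy between the smoothed target and the model prediction."""
    log_probs = log_softmax(logits)
    target = smooth_labels(target_index, len(logits), epsilon)
    return -sum(t * lp for t, lp in zip(target, log_probs))


# Example: a 5-word vocabulary where the decoder is confident in word 2.
logits = [0.0, 0.0, 5.0, 0.0, 0.0]
plain_loss = label_smoothed_cross_entropy(logits, 2, epsilon=0.0)
smoothed_loss = label_smoothed_cross_entropy(logits, 2, epsilon=0.1)
```

With `epsilon=0.0` the function reduces to the ordinary cross-entropy. With a positive `epsilon`, a very confident prediction is penalized slightly, which is the regularizing effect label smoothing is meant to provide during caption decoder training.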