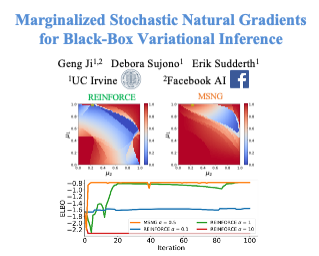

Abstract:

We examine the accuracy of black box variational posterior approximations for parametric models in a probabilistic programming context. The performance of these approximations depends on (1) how well the variational family approximates the true posterior distribution, (2) the choice of divergence, and (3) the optimization of the variational objective. We show that even when the true variational family is used, high-dimensional posteriors can be very poorly approximated using common stochastic gradient descent (SGD) optimizers. Motivated by recent theory, we propose a simple, parallelizable way to improve SGD estimates for variational inference. The approach comes with a convergence diagnostic and a novel stopping rule that is robust to noisy objective function evaluations. We show empirically that the new workflow works well on a diverse set of models and datasets, and that it otherwise warns when the stochastic optimization fails or the chosen variational distribution is inadequate.