Abstract:

Machine learning models, especially deep neural networks have been shown to reveal membership information of inputs in the training data.

Such membership inference attacks are a serious

privacy concern, for example, patients providing

medical records to build a model that detects HIV

would not want their identity to be leaked. Further, we show that the attack accuracy amplifies when the model is used to predict samples that

come from a different distribution than the training set, which is often the case in real world applications. Therefore, we propose the use of causal

learning approaches where a model learns the

causal relationship between the input features and

the outcome. An ideal causal model is known

to be invariant to the training distribution and

hence generalizes well to shifts between samples

from the same distribution and across different

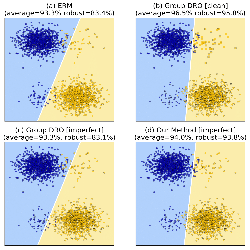

distributions. First, we prove that models learned

using causal structure provide stronger differential privacy guarantees than associational models under reasonable assumptions. Next, we show

that causal models trained on sufficiently large

samples are robust to membership inference attacks across different distributions of datasets and those trained on smaller sample sizes always have

lower attack accuracy than corresponding associational models. Finally, we confirm our theoretical claims with experimental evaluation on 4 datasets

with moderately complex Bayesian networks and

an image dataset of colored MNIST. We observe that neural

network-based associational models exhibit upto

80% attack accuracy under different test distributions and sample sizes whereas causal models exhibit attack accuracy close to a random guess.

Our results confirm the value of the generalizability of causal models in reducing susceptibility to privacy attacks.