Abstract:

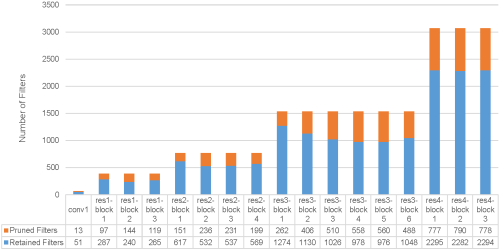

Channel pruning is a popular technique for compressing convolutional neural networks (CNNs), and various pruning criteria have been proposed to remove redundant filters. Through comprehensive experiments, we found two blind spots of pruning criteria: (1) Similarity: There are strong similarities among several primary pruning criteria that are widely cited and compared. Under these criteria, the rankings of the filters' Importance Scores are almost identical, resulting in similar pruned structures. (2) Applicability: The filters' Importance Scores measured by some pruning criteria are too close to one another to distinguish the network redundancy well. In this paper, we analyze these blind spots for different types of pruning criteria under both layer-wise pruning and global pruning. We also break some stereotypes; for example, we show that the results of $\ell_1$ and $\ell_2$ pruning are not always similar. These analyses are based on empirical experiments and on our assumption (the Convolutional Weight Distribution Assumption) that the well-trained convolutional filters in each layer approximately follow a Gaussian-like distribution. This assumption has been verified through systematic and extensive statistical tests.
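To make the two blind spots concrete, the following is a minimal sketch, not the paper's experimental code. It assumes synthetic filters drawn i.i.d. from a zero-mean Gaussian (a simplification of the Convolutional Weight Distribution Assumption), uses the $\ell_1$ and $\ell_2$ norms as two norm-based criteria, and uses Spearman rank correlation as one possible measure of rank similarity. The helper names `importance_scores` and `spearman`, the layer shape, and the standard deviation are all hypothetical choices made for illustration.

```python
import numpy as np

def importance_scores(filters, p):
    """Return the l_p norm of each filter (one score per output channel)."""
    flat = filters.reshape(filters.shape[0], -1)
    return np.linalg.norm(flat, ord=p, axis=1)

def spearman(a, b):
    """Spearman rank correlation, assuming no ties (holds for continuous random data)."""
    rank_a = np.argsort(np.argsort(a))
    rank_b = np.argsort(np.argsort(b))
    return np.corrcoef(rank_a, rank_b)[0, 1]

# Synthetic "layer": 64 filters of shape 32 x 3 x 3 (assumed, not from the paper).
rng = np.random.default_rng(0)
filters = rng.normal(loc=0.0, scale=0.05, size=(64, 32, 3, 3))

l1 = importance_scores(filters, 1)
l2 = importance_scores(filters, 2)

# Similarity: how closely do the two criteria rank the filters?
rho = spearman(l1, l2)

# Applicability: relative spread of the scores (std / mean). A small value
# means the scores are close together and hard to separate by a threshold.
spread = l2.std() / l2.mean()

print(f"Spearman correlation between l1 and l2 ranks: {rho:.3f}")
print(f"Relative spread of l2 scores: {spread:.3f}")
```

In this synthetic setting the two criteria rank the filters similarly and the scores are tightly clustered, which illustrates both blind spots at once. The setting is deliberately simple; as noted above, $\ell_1$ and $\ell_2$ pruning need not agree in every case.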