Abstract:

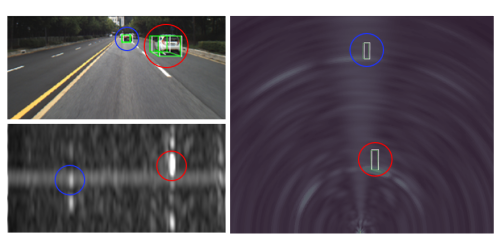

Over the last decade, radar has gained importance in the perception modules of cars and infrastructure owing to its robustness to varying weather and lighting conditions. Despite these advantages, the properties of the radar output signal make developing a fusion scheme challenging. Most prior work does not exploit the full potential of fusion because of the abstraction, sparsity and low quality of radar data. In this paper, we propose a novel fusion scheme that overcomes this limitation by introducing semantic understanding to assist the fusion process. The sparse radar point cloud and vision data are transformed into robust and reliable depth maps, which are then fused in a multi-scale detection network to further exploit their complementary information. We evaluate the proposed fusion scheme on both depth estimation and 2D object detection. The quantitative and qualitative results compare favourably with the state of the art and demonstrate the effectiveness of the proposed scheme, and ablation studies show the contribution of each proposed component.
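As a rough illustration of how a sparse radar point cloud can be expressed as a depth map in the image plane, the following is a minimal sketch. It assumes a pinhole camera model and radar points already transformed into the camera frame. The function name `radar_to_sparse_depth`, the intrinsics `fx, fy, cx, cy`, and the nearest-return rule for pixel collisions are illustrative assumptions. This is not the scheme proposed in this paper, which additionally relies on semantic understanding to obtain robust and reliable depth maps.

```python
import math


def radar_to_sparse_depth(points, fx, fy, cx, cy, width, height):
    """Project 3D radar points onto the image plane as a sparse depth map.

    Assumptions (hypothetical, for illustration only):
      - points are (x, y, z) in the camera frame, in metres,
        with x to the right, y down, and z pointing forward;
      - the camera follows a pinhole model with focal lengths (fx, fy)
        and principal point (cx, cy), all in pixels.

    Returns a height x width nested list; 0.0 marks "no measurement".
    """
    depth = [[0.0] * width for _ in range(height)]
    for x, y, z in points:
        if z <= 0.0:
            continue  # point is behind the camera, cannot be projected
        # Pinhole projection to integer pixel coordinates.
        u = math.floor(fx * x / z + cx)
        v = math.floor(fy * y / z + cy)
        if 0 <= u < width and 0 <= v < height:
            # If several returns hit the same pixel, keep the nearest one.
            if depth[v][u] == 0.0 or z < depth[v][u]:
                depth[v][u] = z
    return depth


# Toy example: five radar returns, an 8 x 6 pixel image.
points = [
    (0.0, 0.0, 10.0),   # lands on the principal point
    (1.0, 0.0, 10.0),   # one pixel to the right
    (0.0, 0.0, 5.0),    # same pixel as the first point, but closer
    (0.0, 0.0, -1.0),   # behind the camera, ignored
    (100.0, 0.0, 1.0),  # projects outside the image, ignored
]
sparse_depth = radar_to_sparse_depth(points, fx=10.0, fy=10.0, cx=4.0, cy=3.0,
                                     width=8, height=6)
valid_pixels = sum(1 for row in sparse_depth for d in row if d > 0.0)
```

In this toy example only a handful of pixels carry a depth value, which reflects the sparsity that makes direct fusion with image data difficult.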