Abstract:

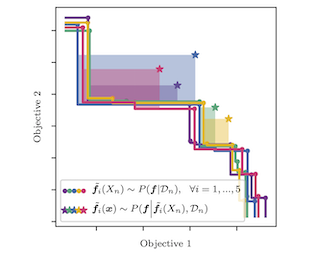

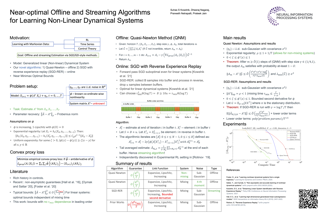

A good seeding or initialization of cluster centers for the $k$-means method is important from both theoretical and practical standpoints. The $k$-means objective is inherently non-robust and sensitive to outliers. A popular seeding such as the $k$-means++ [3] that is more likely to pick outliers in the worst case may compound this drawback, thereby affecting the quality of clustering on noisy data.For any $0 < \delta \leq 1$, we show that using a mixture of $D^{2}$ [3] and uniform sampling, we can pick $O(k/\delta)$ candidate centers with the following guarantee: they contain some $k$ centers that give $O(1)$-approximation to the optimal robust $k$-means solution while discarding at most $\delta n$ more points than the outliers discarded by the optimal solution. That is, if the optimal solution discards its farthest $\beta n$ points as outliers, our solution discards its $(\beta + \delta) n$ points as outliers. The constant factor in our $O(1)$-approximation does not depend on $\delta$. This is an improvement over previous results for $k$-means with outliers based on LP relaxation and rounding [7] and local search [17]. The $O(k/\delta)$ sized subset can be found in time $O(ndk)$. Our \emph{robust} $k$-means++ is also easily amenable to scalable, faster, parallel implementations of $k$-means++ [5]. Our empirical results show a comparison of the above \emph{robust} variant of $k$-means++ with the usual $k$-means++, uniform random seeding, threshold $k$-means++ [6] and local search on real world and synthetic data.